Using Cinebench and Geekbench to get 98% bare metal performance on a Threadripper 1900X

Updates

After reading this, be sure to check out the follow-up article where I do some benchmarks in a single NUMA zone, and a subsequent post with some changes in December 2018.

Background

After an unfortunate electrical incident, it was time to retire my old 64GB Xeon E5 2670 (v1) and move on to greener pastures.

I often run Windows VMs using GPU passthrough for various reasons - most notably, playing games that do not support Linux natively. As a Linux system developer, I want to be able to use only ZFS for my storage. This gives me native replication, checksumming, and transparent compression that is basically penalty-free.

Looking into various new hardware configurations, it became obvious that I would have to upgrade to DDR4 memory no matter which configuration I went with, as it didn’t make sense to reinvest in older DDR3 hardware and keep the Xeon E5 running for another couple of years.

The newer Xeon E5 series has a gimped PCIe lane count. As someone using a lot of lanes (2x GPU, 1x sound card, 1x 10Gb NIC), I found the platform barely usable, with no room left for future expansion.

Ryzen 5 and 7 looked like attractive choices, but the PCIe lane count issue comes into play again, though at a much lower price point than an equivalent Intel SKU. Remember: Intel reserves ECC support for much higher price points.

Enter Threadripper, AMD’s new professional/enthusiast platform.

Specifications

- Motherboard: ASUS PRIME X399-A
  - UEFI: enable ACS, IOMMU+IVRS, SEV
  - CBS -> DF -> Memory Interleave = Channel (enables NUMA)
- CPU: Threadripper 1900X, stock and overclocked to 4.1GHz
- Memory: 32GB and 64GB (2x and 4x 16GB Kingston KVR24E17D8)
- GPUs:
  - NVIDIA GTX 1050 Ti 4GB
  - AMD RX 460 4GB
- Storage: zfs-9999 with native encryption
  - 2x 500GB SSD (Crucial MX500, Samsung 850 EVO)
  - 4x 5TB Seagate SMR + 4x 5TB Seagate SMR
- Kernel: 4.15.8-gentoo
  - Threadripper reset patch
  - Temperature offset fix to subtract 27C from the reported die temperature
  - Disable SME in the BIOS, or boot with `mem_encrypt=off`, since SME prevents the GPU from initialising
- QEMU: 2.11.1
  - AMD Ryzen/EPYC QEMU patchset in /etc/portage/patches/app-emulation/qemu/
  - OVMF (Win10 + Q35) and SeaBIOS (Win7 + i440FX) both working
  - libvirt XML used to set the vendor ID for NVIDIA tomfoolery
- Guests have shared access to all threads and 8GB (when the host has 32GB) or 16GB (when the host has 64GB) of memory:
  - Ubuntu 16.04 with UEFI
  - Windows 10 with UEFI + Q35
  - Windows 7 with SeaBIOS + i440FX

Patches

Linux kernel

The crucial component is the PCI reset patch; without it, QEMU wouldn’t even start. The kernel printed PCIe errors (ACS violations, oh no!), slowed the host to a crawl, and then crashed.

QEMU emulator

Patches are needed to expose the complete CPU core, thread, and cache topology to the guest so that it (hopefully) maintains lower memory latency and avoids “far” accesses.

Note: As of this writing, AMD is still looking into CCX core ID problems with the lower-core-count Zen chips.

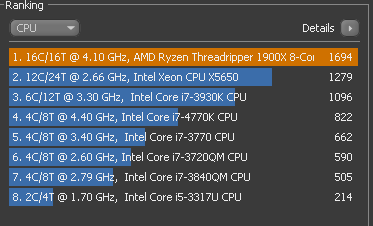

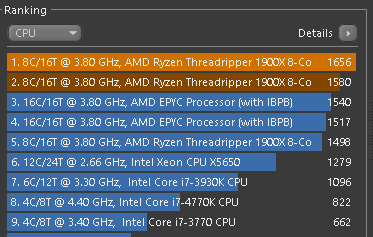

Initial benchmarking

Windows 10

- Geekbench3 (x32) score: 3874 (single-core), 29803 (multi-core)

- Geekbench4 (x64) score: 4143 (single-core), 17733 (multi-core)

- Cinebench R15: 1540 peak with averages in late 1400s/early 1500s

Windows 7

Windows 7 refuses to run Geekbench (3 or 4) because of some kind of “Internal Timer Error”, which indicates 8 seconds of drift between the wall clock and the high-precision timer. I haven’t been able to figure this one out.

Ubuntu (guest)

Geekbench scores in the Ubuntu guest were much better than the Windows guests, but not anywhere close to the reported figures for the 1900X.

Gentoo (host OS)

My Geekbench scores from Gentoo were lower than those published by others on Windows for the same CPU - couldn’t figure this one out either. I assumed it was just due to platform differences that result in useless numbers for cross-platform comparison. So much for being touted as a cross-platform test - right?

Doubting the results

Comparing results to others'

I looked up others’ comparisons of Geekbench scores in Windows vs Linux, and even KVM vs host; their results seemed much more sensible than mine.

I started up an Ubuntu 16.04 VM to run some comparisons and get a bit more insight into the CPU topology and caches. I was able to reach 95-98% of bare metal performance in the Ubuntu guest, but my host still wasn’t getting the expected performance.

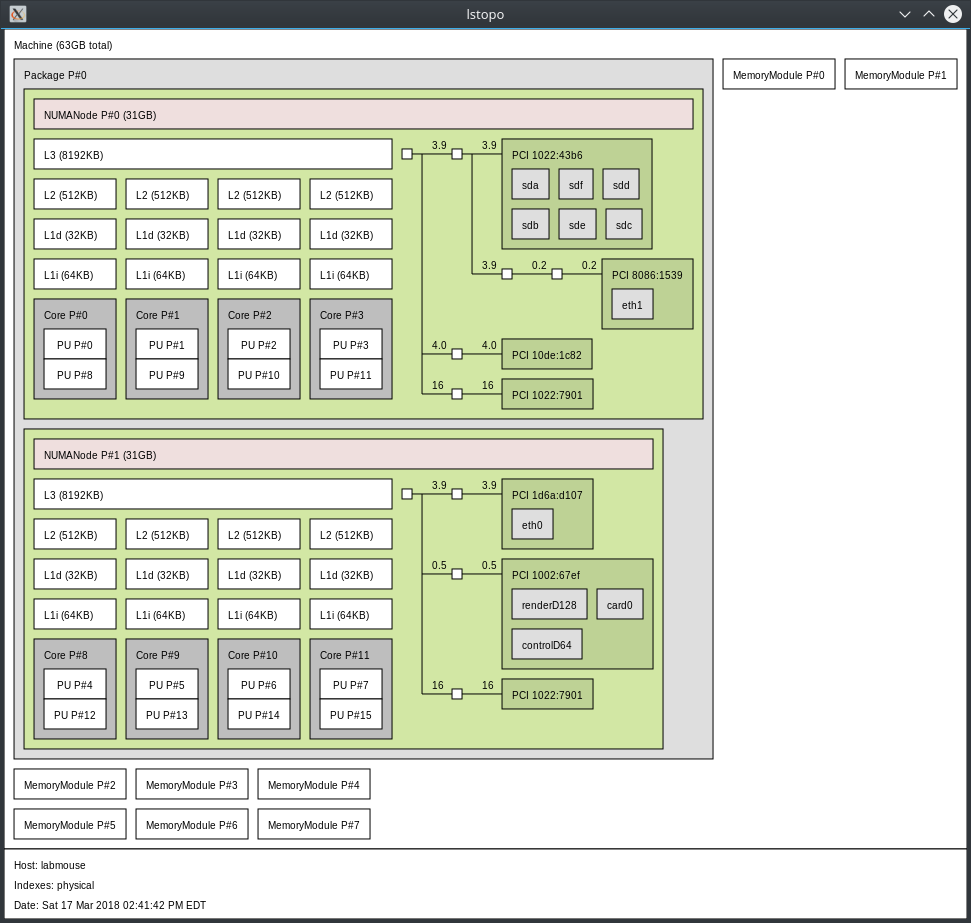

One interesting thing is that the host Gentoo environment saw 2 NUMA nodes on the single 1900X CPU and 2x 8192kB L3 cache.

The guests see 1 NUMA node and 1x 8192kB L3 cache, half of the host’s available resources. AMD has not given an explanation, but seemed to think this is expected. (See the note below about NUMA configuration.)
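To compare what the host and a Linux guest each see, the NUMA layout and L3 cache sharing can be read straight from sysfs. Below is a minimal Python sketch. It assumes the standard Linux sysfs layout. The function names and the `sys_root` parameter are hypothetical conveniences; `sys_root` lets the code be pointed at a copy of sysfs.

```python
from pathlib import Path


def numa_nodes(sys_root: str = "/sys") -> dict[int, str]:
    """Return {node_id: cpulist} for each NUMA node the kernel exposes."""
    base = Path(sys_root, "devices/system/node")
    return {
        int(d.name[len("node"):]): (d / "cpulist").read_text().strip()
        for d in base.glob("node[0-9]*")
    }


def l3_caches(sys_root: str = "/sys") -> dict[str, str]:
    """Return {shared_cpu_list: size} for each distinct L3 cache."""
    caches = {}
    for idx in Path(sys_root, "devices/system/cpu").glob("cpu[0-9]*/cache/index*"):
        if (idx / "level").read_text().strip() != "3":
            continue  # skip L1/L2 entries
        # CPUs sharing one L3 report the same shared_cpu_list, so keying on it dedupes
        caches[(idx / "shared_cpu_list").read_text().strip()] = (idx / "size").read_text().strip()
    return caches
```

Run both functions on the host and inside the guest to get a quick side-by-side of the topology each one reports.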

Testing all the parameters

Richard Yao posted a very relevant note to Reddit describing how he achieved a low-latency VR experience in Windows 7 on an older Xeon system. In his guide, disabling Hyper-V enlightenments is essential, because his testing showed that they weren’t just unhelpful but actually detrimental to performance.

HyperV Enlightenments

The only enlightenments enabled by default for libvirt Windows 10 installations (in this release) are hv_spinlocks, hv_apic, hv_acpi, and hv_relaxed.

Disabling them did not help performance - in fact, removing hv_spinlocks harmed the synthetic CPU test results.

QEMU Chipset

I thought perhaps the Q35 chipset code was immature, so I started a snapshot of the VM using i440FX. The scores did not improve; they dropped instead.

I had overlooked the USB tablet device, however, and removing it did result in a modest improvement.
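The simplest way to drop the device by hand is `virsh edit`. For scripting, here is a minimal Python sketch. It assumes the tablet appears as `<input type='tablet' bus='usb'/>` under `<devices>`, which is how virt-manager usually adds it. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def remove_usb_tablet(domain_xml: str) -> str:
    """Return the domain XML with any <input type='tablet' bus='usb'/> removed."""
    root = ET.fromstring(domain_xml)
    devices = root.find("devices")
    if devices is not None:
        # findall() returns a list, so removing while iterating over it is safe
        for dev in devices.findall("input"):
            if dev.get("type") == "tablet" and dev.get("bus") == "usb":
                devices.remove(dev)
    return ET.tostring(root, encoding="unicode")
```

To use it, feed it the output of `virsh dumpxml <domain>` and load the result back with `virsh define`. ElementTree drops XML comments on the round trip.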

Enable AMD aVIC for kvm_amd

While reading Richard Yao’s article, I saw APICv mentioned and looked for an AMD equivalent. aVIC does exist and is implemented in kvm_amd, but it is disabled by default.

To enable it on Gentoo, I added the following line to /etc/modprobe.d/kvm_amd.conf:

```
options kvm_amd npt=1 nested=1 avic=1
```

kvm_amd also has vgif (virtual GIF) and vls (virtual VMLOAD/VMSAVE) options. As far as I can tell, they mainly benefit nested virtualization rather than networking. Please let me know if you have used vGIF or VLS.
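After a reboot (or a reload of kvm_amd), it is worth confirming that the options actually took effect. Below is a minimal Python sketch. It assumes the loaded module exposes its parameters as files under /sys/module/kvm_amd/parameters/. Boolean parameters may read back as 1/0 or Y/N depending on the kernel version, so both spellings are normalised. All function names are hypothetical.

```python
from pathlib import Path
from typing import Optional

# Boolean module parameters read back as 1/0 or Y/N depending on kernel version
_BOOL_SPELLINGS = {"1": "1", "Y": "1", "y": "1", "0": "0", "N": "0", "n": "0"}


def _norm(value: str) -> str:
    return _BOOL_SPELLINGS.get(value, value)


def parse_modprobe_options(line: str) -> tuple[str, dict[str, str]]:
    """Split 'options <module> key=value ...' into (module, {key: value})."""
    words = line.split()
    if len(words) < 2 or words[0] != "options":
        raise ValueError(f"not a modprobe options line: {line!r}")
    params = {}
    for word in words[2:]:
        key, _, value = word.partition("=")
        params[key] = value
    return words[1], params


def module_params(module: str, sys_root: str = "/sys") -> dict[str, str]:
    """Live parameter values of a loaded module ({} if it is not loaded)."""
    base = Path(sys_root, "module", module, "parameters")
    if not base.is_dir():
        return {}
    return {p.name: p.read_text().strip() for p in base.iterdir()}


def mismatches(options_line: str, sys_root: str = "/sys") -> dict[str, Optional[str]]:
    """Options whose live value differs from the modprobe line (None = not present)."""
    module, wanted = parse_modprobe_options(options_line)
    live = module_params(module, sys_root)
    return {
        key: live.get(key)
        for key, value in wanted.items()
        if _norm(live.get(key, "")) != _norm(value)
    }
```

An empty result from `mismatches("options kvm_amd npt=1 nested=1 avic=1")` means every option is live.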

A different (cooler) approach

I noticed the rather odd idle temperature of 51C and thought maybe the Noctua TR4 SP3 air cooler was inadequate or, at the very least, that the thermal paste was improperly applied (which is easy to do with such a huge die area!).

I removed the Noctua and installed an EKWB TR4 waterblock, reusing some custom loop components from my Xeon E5 build and filling the loop with EKWB fluid.

The idle temperature was still reported as 49C, so I went in search of answers. I found that AMD has confirmed a 27C offset for Threadripper CPUs; a one-line kernel patch (linked earlier) makes lm_sensors report the proper temperature.

So now that I knew the system idles at 29C instead of 51C, I had to look at other options.

Note: My workspace ambient temperature is on average 18C
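The correction itself is plain arithmetic. hwmon reports temperatures in millidegrees Celsius, so the raw Tctl value is divided by 1000 and the 27C offset is subtracted. Below is a minimal Python sketch. It only makes sense on a kernel without the offset patch; with the patch, the kernel already does the subtraction. The hwmon file path differs between systems and is an assumption, and the function names are hypothetical.

```python
from pathlib import Path

# Offset AMD confirmed for Threadripper Tctl readings, in degrees Celsius
TR_TCTL_OFFSET_C = 27.0


def die_temp_c(raw_millidegrees: int, offset_c: float = TR_TCTL_OFFSET_C) -> float:
    """Convert a raw Tctl reading (millidegrees C) into the actual die temperature."""
    return raw_millidegrees / 1000.0 - offset_c


def read_die_temp(hwmon_input: str) -> float:
    """Read a hwmon tempN_input file, e.g. under /sys/class/hwmon/hwmonX/ (path varies)."""
    return die_temp_c(int(Path(hwmon_input).read_text().strip()))
```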

Scouring the internet for other benchmarks

I looked in the Geekbench database for other Threadripper 1900X results and found that most were within 2-10% of mine. The exception was one macOS result, which is a total outlier.

Maybe everyone else is overclocking..?

I enabled the ASUS overclock options in UEFI and re-tested, which brought my scores to between 99% and 110%+ of the results others had reported to Geekbench.

At this point, I was satisfied that the host hardware and environment were functioning as expected. But why the guest reported a Geekbench multi-core score of only about 17000 still mystified me.

CPU, guv’nah?

Richard Yao proposed that CPU clock scaling was affecting my benchmark scores.

Indeed, atop made it look like the CPUs were only 30% utilized during certain parts of Geekbench. As a result, the conservative CPU governor may have been raising clocks too slowly, reducing SMP performance.

Unfortunately, the performance governor gave only marginal gains in benchmark scores while increasing power consumption, so it is probably not worth the extra cost in utilities. Both ondemand and conservative deliver respectable levels of performance.
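Switching governors for a test run takes one sysfs write per CPU (root required). From the shell, `cpupower frequency-set -g <governor>` does the same job. Below is a minimal Python sketch that checks the governor is offered on every CPU before writing anything. The function names are hypothetical.

```python
from pathlib import Path


def _governor_files(sys_root: str) -> list[Path]:
    return sorted(Path(sys_root, "devices/system/cpu").glob("cpu[0-9]*/cpufreq/scaling_governor"))


def governors(sys_root: str = "/sys") -> dict[str, str]:
    """Current governor per CPU, e.g. {'cpu0': 'conservative', ...}."""
    return {f.parent.parent.name: f.read_text().strip() for f in _governor_files(sys_root)}


def set_governor(name: str, sys_root: str = "/sys") -> None:
    """Switch every CPU to `name`, refusing governors the cpufreq driver lacks."""
    files = _governor_files(sys_root)
    # Validate everything first so a bad name leaves all CPUs untouched
    for f in files:
        available = (f.parent / "scaling_available_governors").read_text().split()
        if name not in available:
            raise ValueError(f"{name!r} not offered for {f.parent.parent.name}: {available}")
    for f in files:
        f.write_text(name)
```

For a benchmark run, switch to performance with `set_governor("performance")`, then restore conservative or ondemand afterwards.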

Hugepages, maybe?

The Internet suggested that backing QEMU guest memory with hugepages would reduce CPU overhead by cutting the time spent managing excessively large page tables.

Unfortunately, as my system had only 32GB DDR4 (2x 16GB), I couldn’t really create a large enough pool to assign 8GB to Windows and still run everything else comfortably.

I did, though, in the spirit of science, shut down Xorg and all other applications on a freshly booted system to create 8GB of hugepages and start Windows 10.

However, no soup for you. It didn’t really do much and it could harm performance for certain tests. Why? Who knows. It looks like memory latency climbs a bit higher.

Hugepages definitely help memory performance, but not across all workloads: Cinebench shows no measurable benefit, while Geekbench does.
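Sizing the pool is simple arithmetic: guest memory divided by the hugepage size. With the default 2 MiB pages on x86-64, an 8GiB guest needs 8 × 1024 / 2 = 4096 pages. That number goes into vm.nr_hugepages before the guest starts. Below is a minimal Python sketch. It assumes the page size is taken from the Hugepagesize line of /proc/meminfo, and the function names are hypothetical.

```python
MiB = 1024 ** 2
GiB = 1024 ** 3


def hugepage_size(meminfo_text: str) -> int:
    """Return the default hugepage size in bytes from /proc/meminfo contents."""
    for line in meminfo_text.splitlines():
        if line.startswith("Hugepagesize:"):
            value, unit = line.split()[1:3]
            if unit != "kB":
                raise ValueError(f"unexpected unit {unit!r}")
            return int(value) * 1024
    raise ValueError("no Hugepagesize line found")


def hugepages_needed(guest_bytes: int, page_bytes: int = 2 * MiB) -> int:
    """Pages required to back the whole guest, rounding up to a full page."""
    return -(-guest_bytes // page_bytes)  # ceiling division without floats
```

For example, `hugepages_needed(8 * GiB, hugepage_size(open("/proc/meminfo").read()))` gives the value for `sysctl vm.nr_hugepages=...`. The pool is carved out of free memory, which is why everything else had to be shut down first on a 32GB host.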

Adding more memory

DDR4 is expensive, so obviously you don’t want to spend more than you have to. The best price I found in February 2018, when I purchased, was $263 per 16GB DIMM. 8GB DIMMs cost more than half as much, and I need a lot of capacity for my day-to-day work, so it made sense to start with 2x 16GB DIMMs.

I wasn’t exactly sure how much DDR4 memory clock frequency mattered to CPU performance tests in KVM. I did find some bare metal tests on Phoronix showing that clock rate is not as important as the number of channels.

I ordered another 2x 16GB DIMMs to bring the total to 64GB of 2400MHz DDR4 ECC and re-ran my benchmarks. With this configuration, the Windows VM achieves 98% of bare metal host performance.

NUMA NUMA (Remember THAT video?)

As I mentioned earlier, my original setup with Gentoo on 32GB (2x 16GB) exposed two NUMA nodes to the host Linux kernel, with proper CPU mappings for each:

```
NUMA node0 CPU(s): 0-3,8-11
NUMA node1 CPU(s): 4-7,12-15
```

After I added the second pair of 16GB DIMMs, the second NUMA node disappeared and every CPU was mapped to a single node:

```
NUMA node0 CPU(s): 0-15
```

This struck me as curious, since the benchmarks were registering an improvement from quad channel while simultaneously kicking the system memory latency to about 140ns. That’s quite a bit worse than the 87ns I observed earlier.
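That UMA/NUMA switch can be spotted without eyeballing the output: parse lscpu's node lines into CPU lists. Below is a minimal Python sketch. It assumes English lscpu output (run lscpu with LC_ALL=C to be safe), and the function names are hypothetical. The cpulist format it expands is the same one used in /sys/devices/system/node/node*/cpulist.

```python
import re

# Matches lines such as "NUMA node0 CPU(s):   0-3,8-11"
NODE_LINE = re.compile(r"^NUMA node(\d+) CPU\(s\):\s*(\S+)$")


def parse_cpulist(spec: str) -> list[int]:
    """Expand a Linux cpulist such as '0-3,8-11' into individual CPU numbers."""
    cpus: list[int] = []
    for part in spec.split(","):
        lo, _, hi = part.partition("-")
        cpus.extend(range(int(lo), int(hi or lo) + 1))
    return cpus


def numa_cpus(lscpu_output: str) -> dict[int, list[int]]:
    """Map NUMA node id -> host CPUs, parsed from plain lscpu output."""
    nodes: dict[int, list[int]] = {}
    for line in lscpu_output.splitlines():
        match = NODE_LINE.match(line.strip())
        if match:
            nodes[int(match.group(1))] = parse_cpulist(match.group(2))
    return nodes
```

A single node that covers every CPU is the sign that the board has dropped into UMA mode.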

Reenabling NUMA mode in UEFI

Scouring the internet for information led me to this post from AnandTech that helped me find the relevant UEFI knob to tune.

On my board, under Advanced -> CBS -> DF, there is a Memory model item with choices like auto | distribute | channel. We are interested in channel mode, as it exposes NUMA information to the host once more.

It’s odd to me that UMA mode is supposed to be “the default”, yet I only ended up in it “accidentally”, by adding more memory.

Bottom line - if you’re running Ryzen, you really want to be in NUMA (channel) mode.

Configuring guest NUMA

While enabling NUMA got me back to the 87ns latency reported in my original testing, the Windows 10 VM still sees an average of 110ns.

As described in Red Hat’s libvirt documentation, we can use lstopo (from hwloc) to visually inspect the topology and assign the correct IDs to each vCPU.

The following can then be applied to the domain’s libvirt XML to give it NUMA nodes (adjust the CPU values according to the output of lstopo):

```xml
<memoryBacking>
  <hugepages/>
</memoryBacking>
<vcpu cpuset='0-15' placement='static'>16</vcpu>
<cputune>
  <!-- each guest core (vCPU pair 2k, 2k+1) sits on one host core and its SMT sibling -->
  <vcpupin vcpu='0' cpuset='0'/>
  <vcpupin vcpu='1' cpuset='8'/>
  <vcpupin vcpu='2' cpuset='1'/>
  <vcpupin vcpu='3' cpuset='9'/>
  <vcpupin vcpu='4' cpuset='2'/>
  <vcpupin vcpu='5' cpuset='10'/>
  <vcpupin vcpu='6' cpuset='3'/>
  <vcpupin vcpu='7' cpuset='11'/>
  <vcpupin vcpu='8' cpuset='4'/>
  <vcpupin vcpu='9' cpuset='12'/>
  <vcpupin vcpu='10' cpuset='5'/>
  <vcpupin vcpu='11' cpuset='13'/>
  <vcpupin vcpu='12' cpuset='6'/>
  <vcpupin vcpu='13' cpuset='14'/>
  <vcpupin vcpu='14' cpuset='7'/>
  <vcpupin vcpu='15' cpuset='15'/>
  <emulatorpin cpuset='0-3,8-11'/>
</cputune>
<numatune>
  <memory mode='strict' nodeset='0-1'/>
</numatune>
<cpu mode='host-passthrough' check='partial'>
  <topology sockets='1' cores='8' threads='2'/>
  <numa>
    <!-- cell cpus are guest vCPU ids: vCPUs 0-7 are pinned to host node 0, 8-15 to host node 1 -->
    <cell id='0' cpus='0-7' memory='8' unit='GiB'/>
    <cell id='1' cpus='8-15' memory='8' unit='GiB'/>
  </numa>
</cpu>
```

emulatorpin restricts QEMU’s emulator threads to the host CPUs of a single NUMA node (node 0 here), avoiding remote memory accesses in QEMU itself.
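Typing 16 vcpupin lines by hand is error-prone, so here is a minimal Python sketch that generates them. It rests on two assumptions:

- Host CPU c and c + 8 are SMT siblings, as in the node lists earlier. Check /sys/devices/system/cpu/cpuN/topology/thread_siblings_list on your machine.
- Guest vCPUs 2k and 2k+1 are the two threads of guest core k, which is how QEMU numbers vCPUs for a threads='2' topology.

`guest_cells` groups guest vCPUs by the host node they are pinned to; those are the vCPU ids a matching `<cell cpus=...>` should list. All function names are hypothetical.

```python
import xml.etree.ElementTree as ET


def pin_pairs(host_cores: list[int], sibling_offset: int) -> list[tuple[int, int]]:
    """(vcpu, host_cpu) pairs: guest vCPU 2k -> host core, 2k+1 -> that core's SMT sibling."""
    pairs = []
    for k, core in enumerate(host_cores):
        pairs.append((2 * k, core))
        pairs.append((2 * k + 1, core + sibling_offset))
    return pairs


def guest_cells(pairs: list[tuple[int, int]], host_nodes: dict[int, list[int]]) -> dict[int, list[int]]:
    """Group guest vCPUs by the host NUMA node of the CPU each one is pinned to."""
    node_of = {cpu: node for node, cpus in host_nodes.items() for cpu in cpus}
    cells: dict[int, list[int]] = {node: [] for node in host_nodes}
    for vcpu, host_cpu in pairs:
        cells[node_of[host_cpu]].append(vcpu)
    return cells


def cputune_xml(pairs: list[tuple[int, int]], emulator_cpuset: str) -> str:
    """Render a <cputune> element with one <vcpupin> per pair plus <emulatorpin>."""
    cputune = ET.Element("cputune")
    for vcpu, host_cpu in pairs:
        ET.SubElement(cputune, "vcpupin", vcpu=str(vcpu), cpuset=str(host_cpu))
    ET.SubElement(cputune, "emulatorpin", cpuset=emulator_cpuset)
    ET.indent(cputune)  # pretty-printing; needs Python 3.9+
    return ET.tostring(cputune, encoding="unicode")
```

For the 1900X, call `pin_pairs(list(range(8)), 8)` and pass the result to `cputune_xml(pairs, "0-3,8-11")`; this reproduces the vcpupin sequence used in the XML above.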

For those not using libvirt, the following QEMU command-line arguments may be used:

```
-numa node,nodeid=0,cpus=0-3,cpus=8-11,mem=6144 -numa node,nodeid=1,cpus=4-7,cpus=12-15,mem=6144
```

This assigns 6144MiB (6GB) to each node, for a total of 12288MiB (12GB) of virtual machine memory.
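The same arguments can be generated from a node-to-cpulist map. Below is a minimal Python sketch that mirrors the command line above. Memory is split evenly, and each comma-separated CPU range gets its own cpus= key. The legacy mem= option matches that QEMU 2.11 example; newer QEMU releases prefer memdev= with a memory backend object. The function name is hypothetical.

```python
def numa_args(nodes: dict[int, str], total_mib: int) -> list[str]:
    """Build ['-numa', 'node,nodeid=N,cpus=...,mem=M', ...] with memory split evenly."""
    if total_mib % len(nodes):
        raise ValueError("total memory does not split evenly across nodes")
    per_node = total_mib // len(nodes)
    args: list[str] = []
    for node_id in sorted(nodes):
        # Each comma-separated range becomes its own cpus= key; a bare "8-11"
        # after a comma would otherwise be parsed as the next option key.
        cpus = ",".join(f"cpus={r}" for r in nodes[node_id].split(","))
        args += ["-numa", f"node,nodeid={node_id},{cpus},mem={per_node}"]
    return args
```

The guest's -m value has to match the total, e.g. `shlex.join(["qemu-system-x86_64", "-m", "12288", *numa_args({0: "0-3,8-11", 1: "4-7,12-15"}, 12288)])`.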

Finally, acceptable guest + host memory latency!

I used the Ubuntu UEFI guest to break down the difference in performance; the Geekbench 4 results are here.

The notable change is that memory latency went from 140ns to 82.6ns.

The host vs guest comparison is available here.

There are a few notable things here.

- The guest only has 16GiB of (hugepage) memory, compared to 64GiB for the host (minus 16GiB for the hugepages)

- L3 cache in the guest is just 1024kB? Any answers for this, AMD?

- The single-core Canny test actually performs better in the VM. Probably just a fluke?

- Single-core memory latency is lower than in the host (~82ns vs ~85ns)

- Multi core PDF rendering is faster than the host (40135 vs 38139)

- Memory copying is a struggle for VMs and the problem scales up

I’ve gotten better results in Windows, but comparing Linux against Windows just isn’t the same because of compiler differences. This Linux host vs Linux guest comparison is a much closer approximation.

Useless comparison to Xeon

| Problem | Ryzen | Xeon |
|---|---|---|
| Requires ACS Override | Some | Some |
| Unsafe IRQ Interrupts | - | + |
| Unstable TSC | - | Mine (DX79TO) |
| PCI Reset Bug | + | kinda |

Some Ryzen chipsets require the ACS override patch to break certain devices out of shared IOMMU groups. For example, on many boards, one or more NVMe devices and the onboard sound/Ethernet could not be passed through on their own, and neither could my PCIe x1/x4 slots.
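Before reaching for the override patch, it helps to see which devices actually share a group. Below is a minimal Python sketch that reads the standard /sys/kernel/iommu_groups/<group>/devices/<pci address> layout. The function names are hypothetical.

```python
from pathlib import Path


def iommu_groups(sys_root: str = "/sys") -> dict[int, list[str]]:
    """Map IOMMU group number -> sorted PCI addresses of the devices in it."""
    base = Path(sys_root, "kernel/iommu_groups")
    if not base.is_dir():
        return {}  # IOMMU disabled or not exposed
    return {
        int(group.name): sorted(dev.name for dev in (group / "devices").iterdir())
        for group in base.iterdir()
    }


def shared_groups(groups: dict[int, list[str]]) -> dict[int, list[str]]:
    """Groups with more than one device; these generally have to be passed through together."""
    return {g: devs for g, devs in groups.items() if len(devs) > 1}
```

To put names to the addresses, run `lspci -nns <address>`.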

The same GPU performs much better on X399 than on X79. This is presumably not only because of the more modern Zen architecture, but also because the DX79TO had an unstable TSC that would not calibrate.

Intel never fixed it, since they claimed Linux is unsupported and there was no good way of demonstrating the issue from Windows. The VM would fall back to the slower HPET, with its ten-fold increase in overhead.
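On a Linux host (or a Linux guest), the active clocksource shows whether the kernel has given up on the TSC. Below is a minimal Python sketch that reads the standard sysfs clocksource files. Treating hpet and acpi_pm as the slow fallbacks is an assumption that fits typical x86 machines. Inside a KVM guest, kvm-clock is normal. The function names are hypothetical.

```python
from pathlib import Path

CLOCKSOURCE_DIR = "devices/system/clocksource/clocksource0"
# Assumption: the usual x86 fallbacks once the kernel rejects the TSC
SLOW_FALLBACKS = {"hpet", "acpi_pm"}


def clocksource(sys_root: str = "/sys") -> tuple[str, list[str]]:
    """Return (current, available) clocksources as reported by the kernel."""
    base = Path(sys_root, CLOCKSOURCE_DIR)
    current = (base / "current_clocksource").read_text().strip()
    available = (base / "available_clocksource").read_text().split()
    return current, available


def using_slow_fallback(current: str) -> bool:
    return current in SLOW_FALLBACKS
```

If the host reports hpet, `dmesg | grep -i tsc` usually explains why the TSC was rejected.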

Summary

Despite the rough edges on initial setup, the long-term prognosis for Threadripper is looking good. Whatever issues we’ve had have been handled by AMD or other parties as quickly as they possibly could. It really feels like AMD is invested in its community, while Intel was simply taking theirs for granted.

The end result is 96% of bare metal performance with the lowest possible memory latency.

Disabling NUMA mode can offer higher peak bandwidth at the expense of increased latency, but either mode can translate to reduced performance in certain VM applications. It’s important to know your workload and test appropriately.

I’ve seen suggestions to reduce my VM core/thread count to get higher IPC, but the work I do requires all those threads. You might do your own testing to see whether that would be better for you.

The takeaway here is to ensure you are using quad-channel RAM in NUMA mode if your VM must perform as close to bare metal as possible. This might mean using four to eight 4GB or 8GB DIMMs instead of 16GB DIMMs to get the best $/GB ratio.