revisiting single zone NUMA performance on gaming VMs

Background

This is the third post in a series on VFIO tuning for Threadripper and other NUMA hosts. In my first post, I described how to assign one or more NUMA zones to a libvirt VM on Threadripper and did some basic “benchmarking” to validate raw throughput. Since then, the guide has received a lot of attention from others with larger Threadripper systems. Most complaints came from users running a 1950X or greater, who said their experience didn’t quite match mine and that they saw stuttering while running games.

I tried setting up a single NUMA zone VM and ran some benchmarks to see if there was any noticeable benefit in games, but didn’t see any - at least, not at that time.

I eventually noticed some stuttering on my own system, which I must have learned to ignore after years of subpar gaming performance.

Some good reading about storage performance and the evolution of x-data-plane (now obsolete) here.

Specifications

- Motherboard: ASUS X399a PRIME

- Enable ACS, IOMMU+IVRS, SEV

- CBS -> DF -> Memory Interleave = Channel (enable NUMA)

- CPU: Threadripper 1900X Stock (No OC, EPU enabled)

- Memory: 32GB & 64GB - 2x & 4x 16GB Kingston KVR24E17D8 (OC to 2666MHz from 2400MHz)

- GPUs:

- NVIDIA 1060 6G (Guest)

- AMD RX 460 4G (Host)

- Storage: zfs-0.8.0-rc2, native encryption, special metadata/small_block vdev (ZoL #5182)

- 2x 500GB SSD (Crucial MX500, EVO 850)

- 2x 512GB NVMe (Toshiba RD400)

- 2x 4TB SMR

- Kernel: 4.19.2-gentoo

- Threadripper reset patch is no longer needed

- Disable SME in BIOS or boot with mem_encrypt=off, since SME will prevent the GPU from initialising

- QEMU: 3.0.0

- PulseAudio Patch in /etc/portage/patches/app-emulation/qemu/

- libvirt xml used to set vendor id for NVIDIA tomfoolery

- Windows 10 on UEFI + Q35

Other considerations

No longer overclocking the CPU

I haven’t had great luck with stability on overclocks with the 1900X on my X399 PRIME board, so I decided that memory performance was a better target to chase. By doing so, I also reduced CPU voltage and reclaimed 25W from its base power consumption as a result.

Storage configuration

My storage has been revamped - no longer using local SMR rust, but instead two enterprise 4TB disks in a mirror.

I’ve rebuilt the system using the new metadata allocation code so that my storage metadata and small files are written to a ‘special’ mirror vdev of two 512G NVMe SSD. I am using ZFS ZVOL with cache=none set in QEMU.

NAME           STATE   READ WRITE CKSUM
newjack        ONLINE     0     0     0
  mirror-0     ONLINE     0     0     0
    sdc        ONLINE     0     0     0
    sdd        ONLINE     0     0     0
special
  mirror-1     ONLINE     0     0     0
    nvme1n1p2  ONLINE     0     0     0
    nvme0n1p2  ONLINE     0     0     0
logs
  mirror-2     ONLINE     0     0     0
    nvme0n1p1  ONLINE     0     0     0
    nvme1n1p1  ONLINE     0     0     0

In lieu of a good SLOG device, you can run zfs set sync=disabled pool/filesystem to avoid constant fsync from the Windows guest, at the risk of losing the most recent writes if the host crashes or loses power. My SLOG allows me to stick with sync=standard.

My OS is segregated to its own pair of mirrored SATA SSD.

Patches

Linux

As of 4.19.2 I have no need for external kernel patches, but I do use the abandoned (though still-working) it87 driver for sensor support.

QEMU

Pre-3.0, patches are needed to expose the complete CPU core, thread, and cache topology to the guest so that it (hopefully) maintains lower memory latency and avoids “far” accesses.

If you can use QEMU 3.0, the AMD CPU changes are already integrated - Intel users don’t need to worry about those, but should still use 3.0 for the improved audio timer subsystem.

There are two new patches currently required for an optimal QEMU experience:

This patch modifies the PulseAudio driver for better performance, mostly eliminating the popping/crackling associated with emulated sound.

Though the host Linux kernel (?) manages link speed properly (as lspci output shows under guest load), some issue with how PCIe link speed is propagated to the guest hardware results in NVIDIA (and possibly AMD) guest drivers failing to completely initialise the card.

I compiled the patches for QEMU 3.0 here, which Gentoo users can drop into /etc/portage/patches/app-emulation/qemu/

QEMU / libvirt configuration

Since I’m no longer assigning both NUMA zones to a single VM, I only want to allocate hugepages on node 0. The default method for allocation (nr_hugepages=... cmdline parameter) will split the allocation evenly across all available nodes.

Instead, I use the following command:

NODE=0

echo 4096 > /sys/devices/system/node/node${NODE}/*/hugepages-2048kB/nr_hugepages

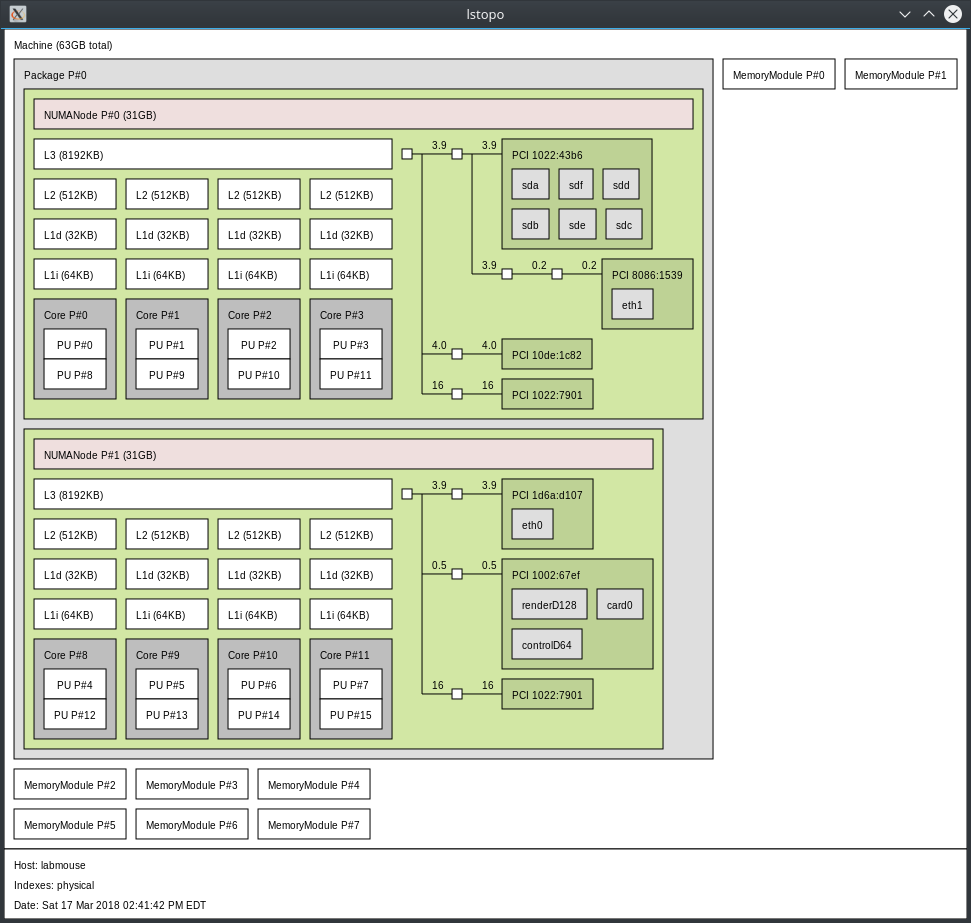

This gives me 8GiB of hugepages on node 0, which is directly connected to my GPU (as evidenced by lstopo). I tested using only node 1, but stutters were evident during gaming.
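The arithmetic and the resulting per-node allocation can be checked from the host. What follows is a minimal sketch, assuming 2048kB hugepages and the standard sysfs layout; the helper names pages_for_gib and show_node_hugepages are made up for illustration and are not part of my setup.

```shell
#!/bin/bash
# Hypothetical helpers for checking per-node hugepage allocation.

# Number of 2048kB hugepages needed to back N GiB of guest memory.
pages_for_gib() {
    local gib=$1
    echo $(( gib * 1024 / 2 ))
}

# Print the 2048kB hugepage count of every NUMA node the kernel exposes.
show_node_hugepages() {
    local f
    for f in /sys/devices/system/node/node*/hugepages/hugepages-2048kB/nr_hugepages; do
        [[ -r $f ]] || continue   # glob did not match: no per-node hugepage entries here
        echo "$f: $(cat "$f")"
    done
}

pages_for_gib 8        # pages required for the 8GiB guest described here
show_node_hugepages
```

If the allocation worked as intended, node 0 should report the full count and node 1 should report 0.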

CPU / IO thread pinning

<memoryBacking>

<hugepages/>

</memoryBacking>

<vcpu placement='static' cpuset='0-15'>8</vcpu>

<iothreads>2</iothreads>

<iothreadids>

<iothread id='1'/>

<iothread id='2'/>

</iothreadids>

<cputune>

<vcpupin vcpu='0' cpuset='0'/>

<vcpupin vcpu='1' cpuset='1'/>

<vcpupin vcpu='2' cpuset='2'/>

<vcpupin vcpu='3' cpuset='3'/>

<vcpupin vcpu='4' cpuset='8'/>

<vcpupin vcpu='5' cpuset='9'/>

<vcpupin vcpu='6' cpuset='10'/>

<vcpupin vcpu='7' cpuset='11'/>

<emulatorpin cpuset='4,12'/>

<iothreadpin iothread='1' cpuset='5,13'/>

<iothreadpin iothread='2' cpuset='6,14'/>

</cputune>

<numatune>

<memory mode='strict' nodeset='0-1'/>

</numatune>

<cpu mode='host-passthrough' check='partial'>

<topology sockets='1' cores='4' threads='2'/>

<numa>

<cell id='0' cpus='0-7' memory='8' unit='GiB' />

</numa>

</cpu>

emulatorpin causes libvirt to isolate the emulator’s threads to a single NUMA zone, avoiding long memory access in QEMU itself.
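Which host CPU numbers belong to which NUMA zone differs between systems, so it is worth reading them from sysfs before copying the cpuset values above. The following is a rough sketch; expand_cpulist is a hypothetical helper name, and the script only assumes the usual /sys/devices/system/node layout.

```shell
#!/bin/bash
# Hypothetical helper: expand a kernel cpulist such as "0-3,8-11"
# into one CPU id per line.
expand_cpulist() {
    local list=$1 part start end cpu
    local IFS=','
    for part in $list; do
        if [[ $part == *-* ]]; then
            start=${part%-*}
            end=${part#*-}
        else
            start=$part
            end=$part
        fi
        for (( cpu = start; cpu <= end; cpu++ )); do
            echo "$cpu"
        done
    done
}

# Show the host CPUs of each NUMA node (prints nothing without node entries).
for node in /sys/devices/system/node/node[0-9]*; do
    [[ -r $node/cpulist ]] || continue
    echo "${node##*/}: $(expand_cpulist "$(cat "$node/cpulist")" | tr '\n' ' ')"
done
```

The CPUs listed for the node that hosts the GPU are the candidates for vcpupin, and the CPUs of the other node are the candidates for emulatorpin and iothreadpin.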

iothreads requires additional configuration

iothreads and iothreadids allow me to set my two ZFS zvol into their own IO threads on NUMA zone 1 to avoid contention from multiple accesses in zone 0. This requires an iothread parameter to be added to the <disk> section(s):

<disk type='file' device='disk'>

<driver name='qemu' type='raw' cache='none' io='native' iothread='1'/>

<source file='/dev/zvol/pool/vm/diskname'/>

<target dev='vda' bus='virtio'/>

<boot order='1'/>

</disk>

Add a SCSI controller for virtio-scsi

virtio-blk is an old and widely available method (and also the default ‘virtio’ storage backend) but we can add a SCSI controller and enable low-overhead multiqueue IO. If you are using pure NVMe storage, you might want to increase the number of queues until you no longer see improvements in performance:

<controller type='scsi' index='0' model='virtio-scsi'>

<driver queues='8'/>

</controller>

Add a libvirt hook to set QEMU’s CPU scheduler (December 2018)

When running CrystalDiskMark or if Steam were to download a game, my whole VM would start stuttering and become laggy, with the mouse cursor sticking or pausing as it moves around the screen. A user on reddit mentioned setting a FIFO scheduler on the QEMU process. I tested it, and LatencyMon became happier, so I made a libvirt hook to set this on all VMs I start:

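#!/bin/bash -x

# libvirt runs this hook as: qemu <guest name> <operation> ...

if [[ $2 == "start"* ]]; then

  # pidof prints every matching PID, so handle more than one running VM

  for pid in $(pidof qemu-system-x86_64); do

    chrt -f -p 1 "$pid"

    echo "$(date) changing scheduling for pid $pid" >> /tmp/libvirthook.log

  done

fi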

Create the directory /etc/libvirt/hooks and place that in there with the filename qemu - don’t forget to mark it executable.
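To confirm that the hook took effect, chrt can also read a policy back. This is a minimal sketch, assuming chrt and pidof are available on the host. policy_from_chrt_line is a made-up helper, and the parsing assumes the usual "pid N's current scheduling policy: NAME" output line.

```shell
#!/bin/bash
# Illustrative check, not part of the hook itself.

# Extract the policy name from the first line of `chrt -p` output, e.g.
# "pid 1234's current scheduling policy: SCHED_FIFO" -> "SCHED_FIFO".
policy_from_chrt_line() {
    local line=$1
    echo "${line##*: }"
}

# Report the policy of every running QEMU process (none running: no output).
for pid in $(pidof qemu-system-x86_64 2>/dev/null); do
    echo "$pid: $(policy_from_chrt_line "$(chrt -p "$pid" | head -n 1)")"
done
```

A VM started after the hook was installed should report SCHED_FIFO; SCHED_OTHER means the hook did not run or did not find the process.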

Make sure you’re using virtio-input

If you’re passing USB hardware directly, you may be fine, but I use virtio-input which allows me to share the keyboard/mouse between host and guest with basically zero latency. However, if you don’t properly add these devices to the VM, PS/2 emulation fallback is used and performance is lost to timer interrupts:

<input type='mouse' bus='virtio'>

</input>

<input type='keyboard' bus='virtio'>

</input>

You might want to use type='tablet' when passing through absolute pointing devices (e.g. a graphics tablet), but if you use a mouse in this mode, the scroll wheel and extra buttons probably won’t work.

Ensure you’re using PCIe hierarchy

In a Windows guest, you can check using GPU-Z for the current detected bus interface. It should say PCIe 3.0 x16 @ PCIe 3.0 x16.

Without the PCIe link patch, the NVIDIA control panel will show PCIe 1.0x.

The effective rate is indeed PCIe 2.0 or 3.0 (whatever lspci in the host OS reports) but NVIDIA’s driver didn’t enable memory clock scaling when it somehow detected a PCIe 1.0x interface.
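Besides lspci, the host side of the link can be read from sysfs on kernels that expose current_link_speed and current_link_width. The sketch below makes some assumptions: 0000:0b:00.0 is the GPU's host address as used in the hostdev XML in this post, pcie_gen_from_speed is a hypothetical helper, and the exact speed string varies between kernel versions, so only its leading number is matched.

```shell
#!/bin/bash
# Hypothetical helper: map a sysfs link speed string to a PCIe generation.
pcie_gen_from_speed() {
    case $1 in
        2.5*) echo 1 ;;
        5*)   echo 2 ;;
        8*)   echo 3 ;;
        16*)  echo 4 ;;
        *)    echo unknown ;;
    esac
}

# Host address of the passed-through GPU; adjust for your system.
dev=/sys/bus/pci/devices/0000:0b:00.0
if [[ -r $dev/current_link_speed ]]; then
    speed=$(cat "$dev/current_link_speed")
    echo "gen $(pcie_gen_from_speed "$speed"), width x$(cat "$dev/current_link_width")"
fi
```

Run it while the guest is under load, since that is when the link speed is expected to show its full rate.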

libvirt XML should have something like this:

<controller type='pci' index='0' model='pcie-root'/>

<controller type='pci' index='1' model='pcie-root-port'>

<model name='pcie-root-port'/>

<target chassis='1' port='0x10'/>

<alias name='pci.1'/>

</controller>

And then your GPU’s XML needs the bus modified to point to the new root port.

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x0b' slot='0x00' function='0x0'/>

</source>

<address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0' multifunction='on'/>

</hostdev>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x0b' slot='0x00' function='0x1'/>

</source>

<address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x1'/>

</hostdev>

If using virt-manager, it appears to automatically create the correct PCIe hierarchy, though, on my system, it created a separate root port for the GPU and its HDMI audio device. Others’ testing has shown this to not matter much, or at all.

QEMU commandline overrides

Using these is considered “unsupported”, but it is presently the only way to obtain these results:

<qemu:commandline>

<qemu:arg value='-cpu'/>

<qemu:arg value='host,hv_time,kvm=off,hv_vendor_id=fakenews,migratable=no,+invtsc,-hypervisor'/>

<qemu:arg value='-object'/>

<qemu:arg value='input-linux,id=kbd1,evdev=/dev/input/by-id/kbd-event,grab_all=on,repeat=on'/>

<qemu:arg value='-object'/>

<qemu:arg value='input-linux,id=mouse1,evdev=/dev/input/by-id/mouse-event'/>

<qemu:arg value='-set'/>

<qemu:arg value='device.hostdev0.x-vga=on'/>

<qemu:arg value='-set'/>

<qemu:arg value='device.virtio-disk0.config-wce=off'/>

<qemu:arg value='-set'/>

<qemu:arg value='device.virtio-disk0.scsi=off'/>

<qemu:arg value='-set'/>

<qemu:arg value='device.virtio-disk3.scsi=off'/>

<qemu:arg value='-set'/>

<qemu:arg value='device.virtio-disk3.config-wce=off'/>

<qemu:arg value='-global'/>

<qemu:arg value='pcie-root-port.speed=8'/>

<qemu:arg value='-global'/>

<qemu:arg value='pcie-root-port.width=16'/>

<qemu:env name='QEMU_AUDIO_DRV' value='pa'/>

<qemu:env name='QEMU_PA_SERVER' value='/run/user/1000/pulse/native'/>

</qemu:commandline>

I’ve heard that x-vga is no longer required, but I’ve not tested without it lately.

Disabling wce and scsi layers for storage allows ZFS to manage things more directly.

It may be necessary to change the uid from 1000 to something else, depending on the user who runs the VM. You might want to remove the sound parameters altogether if they aren’t needed in your setup at all.

The pcie-root-port arguments will forcibly override the reported link speed to the guest so that the driver behaves as it should.

Setting migratable=no,+invtsc allows us to force use of the TSC which is a more efficient timing source than HPET, though your mileage may vary if you have a broken TSC.
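One rough way to judge whether the host TSC is trustworthy is to look at the CPU flags Linux reports and at the clocksource the host kernel picked for itself. This is only a sketch under those assumptions; has_flag is a made-up helper, and seeing both flags does not guarantee a well-behaved TSC.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a flag appears as a whole word in a flags string.
has_flag() {
    local flag=$1 flags=$2
    [[ " $flags " == *" $flag "* ]]
}

# Flags of the first CPU listed in /proc/cpuinfo (empty if unavailable).
flags=$(grep -m1 '^flags' /proc/cpuinfo 2>/dev/null | cut -d: -f2)
for f in constant_tsc nonstop_tsc; do
    if has_flag "$f" "$flags"; then
        echo "$f: present"
    else
        echo "$f: missing"
    fi
done

# The clocksource the host kernel is currently using.
cs=/sys/devices/system/clocksource/clocksource0/current_clocksource
if [[ -r $cs ]]; then
    echo "host clocksource: $(cat "$cs")"
fi
```

If the host itself has fallen back from tsc to hpet or acpi_pm, forcing the TSC on the guest is less likely to help.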

Remember to re-enable MSI for devices

Using the MSI_utilV2 Windows utility, ensure that MSI is enabled for each device in the guest.

Summary

Using the above configuration has satisfied my requirements for a stable and performant Windows guest. The framerates I am seeing in games are “shockingly smooth”, and I have used these settings on my old DX79 system with a 1050Ti to eliminate annoying frame loss and stuttering when multi-tasking.